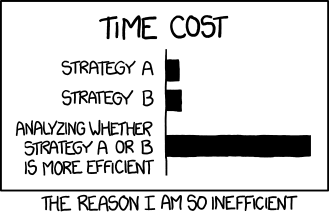

The Reason I Am So Inefficient

Today's XKCD is a good illustration of my old point about bounded rationality:

Of course, if you already knew the time cost of A and B, then there would be no problem. But if you have to spend some amount of processing power analyzing A and B, and if processing power is scarce, then you are faced with a higher-level optimization problem, which may itself demand processing power to solve (in this cartoon, much more processing power than is actually saved).

I'll write a more complete post about this soon. I think it is actually a reasonably deep problem that should influence our conception of rationality.

15 Comments:

I think this is related to the last post, a little bit. I think of both in terms of optimization problems.

In the external-world-knowledge case (last post), you can spend some effort to try and factor out the influence of subjective idiosyncrasies (e.g. by inventing a temperature scale that can be used by others). Doing this, we seem to arrive at judgments that are relatively objective. But we never have a secure guarantee that our judgments are not systematically made up.

In the rationality case (this post), a decision problem (do something) spawns a meta-problem (is A or B more efficient?) which spawns a second-order meta-problem (how long to evaluate whether A or B is more efficient?), with no end in sight. In practice, we can use heuristics to collapse the meta-problems (e.g., spend no more time on the meta-problem than small α * EV(task), based on a fast judgment of EV(task)). There is ultimately no secure guarantee that the heuristic is a good one, and it can always in principle lead to catastrophe. But we use learning over long periods, as well as evolved predispositions, to try and center our heuristics in the area of maximum fitness.
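To make the collapsing heuristic concrete, here is a minimal sketch, not a claim about how anyone actually decides. It assumes you already have a fast guess at EV(task) and can put a fixed price on examining each option. Apart from α and EV(task), every name here (`deliberation_budget`, `pick_strategy`, `cost_per_estimate`) is hypothetical and invented for illustration.

```python
from typing import Callable, Sequence, Tuple


def deliberation_budget(ev_task: float, alpha: float = 0.05) -> float:
    """Cap on time spent on the meta-problem: alpha * EV(task).

    ev_task is a fast, rough judgment of the task's value (an assumption,
    not a measured quantity); alpha is the small fraction from the heuristic.
    """
    if ev_task < 0:
        raise ValueError("ev_task must be non-negative")
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be between 0 and 1")
    return alpha * ev_task


def pick_strategy(
    strategies: Sequence[str],
    estimate_cost: Callable[[str], float],
    cost_per_estimate: float,
    budget: float,
) -> Tuple[str, float]:
    """Examine strategies in order until the budget runs out.

    Returns the cheapest strategy examined so far and the time spent
    deliberating. If nothing can be examined within the budget, the first
    strategy is returned unexamined.
    """
    if not strategies:
        raise ValueError("need at least one strategy")
    best = strategies[0]
    best_cost = None
    spent = 0.0
    for strategy in strategies:
        if spent + cost_per_estimate > budget:
            break  # stop deliberating; act on what we have
        cost = estimate_cost(strategy)
        spent += cost_per_estimate
        if best_cost is None or cost < best_cost:
            best, best_cost = strategy, cost
    return best, spent


# Hypothetical numbers: a task worth about 60 units, alpha = 0.1.
true_costs = {"A": 10.0, "B": 7.0, "C": 1.0}
budget = deliberation_budget(60.0, alpha=0.1)
choice, spent = pick_strategy(["A", "B", "C"], true_costs.__getitem__, 2.5, budget)
```

With these made-up numbers the budget covers two evaluations, so the procedure settles on B and never looks at C, which was actually the cheapest. That is the point of the comment above: the cap stops the regress, but nothing guarantees the cap itself was well chosen.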

Yes, I agree with this way of thinking about things. What I think is noteworthy is that while heuristics can be very successful in some sort of absolute sense (so for instance, I'm quite pleased with the eradication of smallpox and near-eradication of polio, and this feels like a clear "win," even if it wasn't conducted in an entirely optimal manner), heuristics can never rest on a completely firm foundation. You can "go to war with the army you have," and you can feel pretty good about that army, but you can never know that you've truly "optimized." (And as a result, I don't think there is "one true rationality" that can be identified and applied to the world around us.)

However, having said that, I think what is called for is a re-conceptualization of what it means to "optimize." And it is important to retain the ability to make judgments about relative rationality. You can't absolutely 100% rule out that cholera might spread through the air, but you have to be able to draw lines and say at some point that the water-borne people are right and the believers in "miasma" are wrong. This, in spite of the fact that there can be no recourse to some kind of absolute rational/irrational distinction.

In other words, we have to re-calibrate our expectations so that "good enough" rationality counts as a legitimate basis for action (and therefore a legitimate basis for rejecting alternative approaches).

I'll have more to say about this, probably in a separate post.

Yeah, this kind of thinking evidently applies to all kinds of problems, but I think the sort of philosophy-of-science framework is the right one for all of them.

I think I agree that there isn't "one true rationality," for this sort of reason. A mathematical result in this neighborhood is the "No Free Lunch theorem." When I get some time I think I would like to write an essay introducing this result to philosophers, who for the most part haven't heard of it.

(Possibly another limiting result on "one true rationality" is the game theorist's "Folk Theorem.")

Or to put it slightly differently: if you follow the chain of reasoning back far enough, you will come to a "brute" fact, a non-rational basis on which your analysis rests. You can count on some kind of Darwinian thing, but of course that depends on a logically unsupportable assumption about the future resembling the past in one particular way and not in another.

This is really just a recapitulation of the Hume point that our knowledge of the world does not rest on any formal logic but on pragmatic decisions that incorporate non-rational considerations. The process of rationality is a complex human endeavor that can't be captured in the neat language that we use to try to sum it up.

Ahh, I'm not good at articulating these things on the fly [or, really, not on the fly - ed.], so I'll try to boil it down to the essence and write a post.

I agree that "no free lunch" captures what I am trying to say very well. The next step, though, is formulating a basis for making judgments about different approaches. This actually goes back to my post about vegetarians, oddly, except that in this case I think it's important to reject the extremes: the absolutists who think that there is in fact "one true rationality" to which they have access, and on the other hand the extreme relativists who think that all ideas are equally valid. Quite often, I think, when the absolutist is denied his theory, he thinks there is no tenable middle ground and immediately capitulates to the relativists (I am using the term imprecisely, just trying to get the idea across). Or, more likely, he refuses to give an inch, however convincing the case against absolutism, because he thinks that any concession will lead down the slippery path to relativism. There has to be a "stopping point" in between, but articulating it may not be easy.

Yes, I agree with the diagnosis of "disappointed absolutism" (I think the phrase comes from H.L.A. Hart; I find it applies often).

I don't really think there's an identifiable "stopping point" in the middle ground, though. I think deciding how to behave, once you accept this kind of framework, is more a matter of engineering than of theory. You experiment, calibrate, see how you do, etc.

Yeah, but if there's no stopping point, then there needs to be at least a kind of "satisficing point" or something, a somewhat convincing method for making judgments.

Another way to think about it is that a relativist might point to the non-rational factors that enter into the process and say something like, "It's all politics." And I think this is just a bait-and-switch where "politics" means "any process with any subjectivity" (in other words, it's not necessarily a problematic kind of "politics"), but then the relativist quickly switches to a more negative definition of "politics" so as to claim that, for instance, the idea that HIV causes AIDS is just some kind of white colonialist thing. (But I want to leave open the possibility that relativists can be right, by accident as it were, since they are firing their weapons so indiscriminately. In other words, I do think that the problematic kind of politics intrudes at times, it's just not ubiquitous and it doesn't discredit the whole project.)

So my point is, I kind of want to rescue rationality from the absolutists without resorting to the discreditable tactics of the relativists. Or, you might say, rescue rationality from both of them.

Anyway this is all rambling and poorly constructed, but I think I've gotten my point across.

Yeah, I don't mean that you can never find a place to stop (a satisficing point), but that the proper place is ultimately not a matter of theory but one of "engineering," meaning the kinds of reasons you can offer will be goal-relative, based in past experience, and contingent in various other ways.

I think this is a slightly different problem from, How do I win conversations with recalcitrant assholes (like the relativist you describe)? I think principled reasons can be used in actual conversation / decision-making, with the understanding that they are not really ultimate backstops. So it is always open to an interlocutor to find in-principle admissible reasons to disagree.

What you can do depends a lot on context. Sometimes, you will be arguing in an arena of agreed principles (e.g., law courts); in that case, you rely on those arguments. Sometimes, the best you can do is try to show that the balance of "engineering reasons" points a particular way.

But when you're talking with someone who runs the whole range of black-magic argumentative tactics, like strategically equivocating on word meanings, how to win is another kind of engineering problem, very case-specific and offering no guaranteed satisfaction.

Heh, fair enough. Part of my project is to relegate the relativists (or at least the problematic ones) to the "recalcitrant asshole" category. In other words, I'm fine with conducting the debate on engineering principles or whatever, I just want to make sure there's not a deeper truth there that I'm missing. I want to be able to draw a distinction (between subjectivity and "politics" or "hegemony" or whatever) that is satisfactory to me even if it doesn't actually win the debate. I think that's doable, I just haven't done it yet.

I feel like, on some level, once you accept that you should look both ways before you cross the street you've bought into the whole absolutist position (at least on a functional level).

But I think the functional level is what matters. If someone eats a healthy breakfast like an absolutist, and looks both ways like an absolutist, and walks like an absolutist, and quacks like an absolutist, then they are an absolutist even if they say they take Hume’s problem of induction very seriously.

Is this a version of, If you’re really a skeptic, why do you get out of the way of oncoming traffic?

If so, I can't resist pointing out that I wrote a little paper about that. Short and sweet! Link.

(Not sure if this is a good place to put student work! Not sure if I should put student work online at all! A small experiment I guess.)

I guess my question is, how can you tell an absolutist from a pragmatist by what he eats for breakfast?

I recall the original email discussion we had around that paper. It matches my own critique of the Greco paper (though I went for the Buddhist monk example rather than the Stoic example).

In Thailand, a Buddhist monk will sit down silently with an empty bowl in front of him. Sometimes people put food in the bowl, sometimes they don’t. The monk aims to be indifferent to what is in the bowl, or to whether it holds any food at all. Someone who has actively gone out of their way to obtain a nutritious breakfast, complete with multivitamins and a trip to the farmers market, is not indifferent to breakfast. Their actions are not those of someone motivated by a belief in skepticism. That is how I tell a skeptic by what they had for breakfast.

In the latter case I would disbelieve their claims to skepticism. I would think that either (A) the claim was a fashion accessory rather than a real belief, or (B) the claim was a strategic choice for bad-faith argumentation, and over time we would observe a pattern of general skepticism when discussing object-level issues on which they were incorrect and a pattern of engagement with object-level detail when discussing issues on which they were correct.

